Efficiently Merging fastText Models with MLflow for Enhanced NLP Pipelines

fastText is an efficient library for text classification and word representation learning, developed by Facebook AI Research and open sourced in 2016. It builds word representations with the CBOW and skip-gram models and supports both unsupervised and supervised natural language processing tasks. The code is very fast and scales well, processing large datasets quickly and accurately. I strongly advise reviewing Facebook's blog post and research paper to understand the motivation behind fastText, how it was developed and how it works. Although it is almost 7 years old, it is still a strong contender against modern NLP architectures such as Transformers. fastText especially shines at tasks like multi-class classification or working with multiple languages. Training and inference can be much faster than with a Transformer setup, and fastText can yield production-ready results without much fine-tuning.
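To make the CBOW/skip-gram distinction concrete, here is a toy sketch (not fastText's actual implementation) of the training examples each model derives from a tokenized sentence. It assumes a fixed window size and ignores what fastText adds on top, such as subword character n-grams, negative sampling and a randomly sampled window size.

```python
from typing import List, Tuple


def skipgram_pairs(tokens: List[str], window: int) -> List[Tuple[str, str]]:
    """Skip-gram: every (center, context) pair is one training example;
    the model learns to predict the context word from the center word."""
    pairs = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs


def cbow_examples(tokens: List[str], window: int) -> List[Tuple[List[str], str]]:
    """CBOW: the surrounding context words (combined into one hidden vector
    in the model) are used to predict the center word."""
    examples = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        examples.append((context, center))
    return examples


tokens = "fasttext learns word vectors fast".split()
print(skipgram_pairs(tokens, window=1)[:3])
# [('fasttext', 'learns'), ('learns', 'fasttext'), ('learns', 'word')]
print(cbow_examples(tokens, window=1)[1])
# (['fasttext', 'word'], 'learns')
```

fastText's supervised classifier follows a similar idea to CBOW: the averaged word (and n-gram) vectors of a whole text predict its label instead of a center word.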

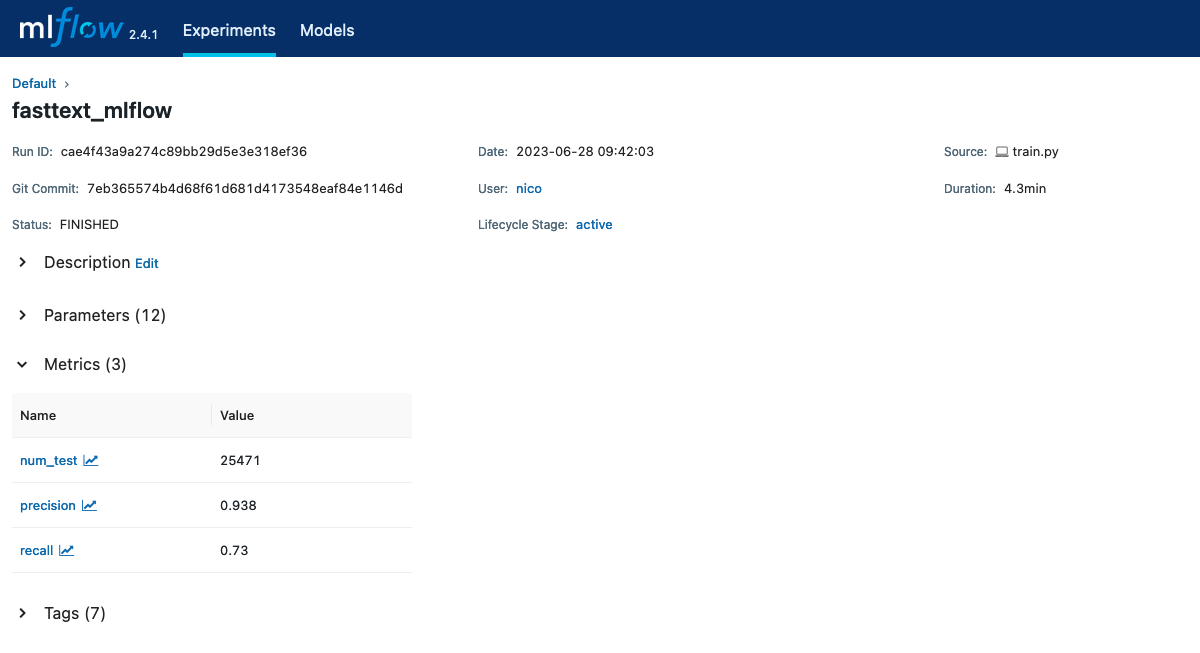

MLflow is an open source tool for managing the machine learning lifecycle: it tracks experiments and model metrics and offers a central place to store models. As such, it has been a valuable tool in our AI-driven projects.

A current project uses both fastText and MLflow. While MLflow supports a wide array of frameworks out of the box (like sklearn, pytorch, transformers, ...), fastText is not on that list. This blog post shows how to use MLflow's pyfunc.PythonModel to build a wrapper that makes fastText usable with MLflow. The main problem is that fastText models cannot be pickled, which is how MLflow's log_model serializes a custom Python model.

Therefore we need a wrapper class that handles training, saving and loading. As an example, the class could look like the following. load_context() loads the model artifacts when the MLflow model is loaded. predict() evaluates a pyfunc-compatible input and produces a pyfunc-compatible output. As a bonus, we added train() and test() to keep all model-specific code in one place.

```python
from typing import Optional

import fasttext
from mlflow.pyfunc import PythonModel


class FastTextMLFModel(PythonModel):
    """
    A wrapper class for MLflow to train, test and predict a fastText model.

    [fastText](https://fasttext.cc/) is a library for efficient text classification
    and representation learning, open sourced by Meta.
    """

    def __init__(self, params: dict):
        # fastText training arguments (e.g. "input", "epoch", "lr") plus the
        # wrapper-specific keys "model_location" and "test_input"
        self.params = params
        self.model = None

    def load_context(self, context):
        """
        Load the fastText model from the artifact logged with the MLflow model.
        MLflow calls this with a PythonModelContext when the model is loaded.
        """
        self.model = fasttext.load_model(context.artifacts["fasttext_model_path"])

    def clear_context(self):
        # fastText models cannot be pickled, so drop the reference before log_model
        self.model = None

    def train(self) -> str:
        """
        Train a fastText model with the provided parameters.

        Returns:
            model_path: the path the model was saved to
        """
        self.model = fasttext.train_supervised(
            # exclude keys that are not fastText training parameters
            **{k: v for k, v in self.params.items() if k not in ["model_location", "test_input"]}
        )
        self.model.save_model(self.params["model_location"])
        return self.params["model_location"]

    def predict(self, context, model_input, params: Optional[dict] = None) -> tuple:
        """
        Predict based on the model (pyfunc interface).

        Args:
            context: the MLflow PythonModelContext (unused here)
            model_input: a pandas DataFrame whose first column holds the texts,
                a list of strings, or a single string
            params (dict, Optional): "threshold" to cut off the predictions (default 0.0)
                and "k", how many predictions per text (-1 returns as many as possible)

        Returns:
            res: tuple containing at index 0 the predictions and at index 1 the corresponding scores
        """
        params = params or {}
        if hasattr(model_input, "iloc"):  # pandas DataFrame: texts in the first column
            texts = model_input.iloc[:, 0].tolist()
        elif isinstance(model_input, str):
            texts = [model_input]
        else:
            texts = list(model_input)
        return self.model.predict(
            texts, k=params.get("k", -1), threshold=params.get("threshold", 0.0)
        )

    def test(self, test_input: Optional[str] = None, threshold: float = 0.65) -> dict:
        """
        Test the model with fastText's internal test function.

        Args:
            test_input (str, Optional): path to a test file; if None, params["test_input"] is used
            threshold (float, Optional): threshold to cut off predictions

        Returns:
            results (dict): number of test samples, precision and recall
        """
        results = self.model.test(
            test_input if test_input else self.params["test_input"], k=-1, threshold=threshold
        )
        return {
            "num_test": int(results[0]),
            "precision": float(results[1]),
            "recall": float(results[2]),
        }
```
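Why clear_context() matters can be reproduced without fastText or MLflow. In the sketch below, FakeModel and Wrapper are hypothetical stand-ins: FakeModel holds a threading.Lock in place of fastText's native model object, and a plain dict replaces MLflow's context.artifacts. It assumes, as the wrapper above does, that the model file is saved separately and reloaded in load_context().

```python
import pickle
import threading


class FakeModel:
    """Hypothetical stand-in for a fastText model: it holds a native
    resource (here a lock) that pickle cannot serialize."""

    def __init__(self, path: str):
        self.path = path
        self._handle = threading.Lock()  # unpicklable, like a native C++ object


class Wrapper:
    """Mirrors the clear_context/load_context pattern of FastTextMLFModel."""

    def __init__(self, model_path: str):
        self.model_path = model_path
        self.model = FakeModel(model_path)

    def clear_context(self):
        self.model = None  # drop the unpicklable object before pickling

    def load_context(self, artifacts: dict):
        # re-create the model from the saved file, not from the pickle
        self.model = FakeModel(artifacts["fasttext_model_path"])


def try_pickle(obj) -> bool:
    """Return True if obj can be pickled."""
    try:
        pickle.dumps(obj)
        return True
    except (TypeError, pickle.PicklingError):
        return False


wrapper = Wrapper("model.bin")
print(try_pickle(wrapper))  # False: the in-memory model cannot be pickled
wrapper.clear_context()
restored = pickle.loads(pickle.dumps(wrapper))
restored.load_context({"fasttext_model_path": "model.bin"})
print(restored.model is not None)  # True
```

The design choice is the same as in the wrapper: only lightweight, picklable state travels with the pickled object, while the heavy model travels as a file artifact.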

Now we can use this wrapper class inside an MLflow run:

```python
import mlflow
import mlflow.pyfunc
from mlflow.models.signature import ModelSignature
from mlflow.types.schema import Schema, ColSpec

from app.model import FastTextMLFModel

# example parameters (hypothetical paths and values): fastText training
# arguments plus the wrapper keys "model_location" and "test_input"
params = {
    "input": "train_file.txt",
    "epoch": 25,
    "lr": 0.5,
    "model_location": "model.bin",
    "test_input": "test_file.txt",
}

with mlflow.start_run(run_name="fasttext-model") as run:
    # set tags
    mlflow.set_tag("run_id", run.info.run_id)
    # log parameters
    mlflow.log_params(params)

    # fasttext model
    fasttextMLF = FastTextMLFModel(params)
    # train model
    model_location = fasttextMLF.train()

    # test and log metrics
    path_to_test_file = "test_file.txt"
    metrics = fasttextMLF.test(path_to_test_file)
    mlflow.log_metrics(metrics)

    name = "a-name"
    input_schema = Schema(
        [
            ColSpec("string", "Text and labels (in format __label__)"),
        ]
    )
    output_schema = Schema([ColSpec("string"), ColSpec("float")])
    signature = ModelSignature(inputs=input_schema, outputs=output_schema)

    # the model file was already saved by fastText at model_location and is logged
    # as an artifact; drop the unpicklable in-memory model before MLflow pickles the wrapper
    fasttextMLF.clear_context()
    mlflow.pyfunc.log_model(
        artifact_path=name,
        artifacts={"fasttext_model_path": model_location},
        python_model=fasttextMLF,
        signature=signature,
        code_path=["./app"],
        registered_model_name=name,
    )
```

The fastText model file is now stored as an artifact of the run, the wrapper is registered under `name`, and we can leverage MLflow's full capabilities to track, store and deploy our fastText model.
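The signature above describes text with `__label__`-prefixed labels as input and (string, float) pairs as output. A small stdlib-only sketch of both directions follows; the helper names are hypothetical, and it assumes fastText's default `__label__` prefix (configurable via the `label` training argument) and the common convention of putting labels first on each line.

```python
from typing import Iterable, List, Sequence, Tuple

LABEL_PREFIX = "__label__"  # fastText's default label prefix


def to_fasttext_line(text: str, labels: Iterable[str]) -> str:
    """Format one training/test example: labels first, then the text, on a single line."""
    label_part = " ".join(LABEL_PREFIX + label for label in labels)
    clean_text = " ".join(text.split())  # collapse newlines: one example per line
    return f"{label_part} {clean_text}".strip()


def predictions_to_rows(labels: Sequence[str], scores: Sequence[float]) -> List[Tuple[str, float]]:
    """Turn the (labels, scores) pair fastText's predict returns for one text
    into (label, score) rows matching a (string, float) output schema."""
    rows = []
    for label, score in zip(labels, scores):
        name = label[len(LABEL_PREFIX):] if label.startswith(LABEL_PREFIX) else label
        rows.append((name, float(score)))
    return rows


print(to_fasttext_line("Great product,\nfast delivery", ["positive", "shipping"]))
# __label__positive __label__shipping Great product, fast delivery
print(predictions_to_rows(("__label__positive", "__label__shipping"), [0.91, 0.42]))
# [('positive', 0.91), ('shipping', 0.42)]
```

Converting scores with float() also covers fastText returning NumPy arrays of probabilities.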